Dear delegators,

We are reporting that, during the second day of epoch 221 (evening/night of Oct 3/4), several minted blocks were not added to the chain. We are currently only halfway through the epoch, but this issue will likely result in lower rewards for this epoch (221). Here’s what went wrong, but let me first outline how our pool servers are set up.

About Our Configuration

For security and reliability purposes, our servers are configured as follows:

- Location One: bare metal server for pools, behind two redundant local relays. Each relay keeps the pools synced, so that one relay can go down for maintenance or fail without causing any interruptions;

- Location Two: same configuration as Location One. In the event of a power outage or Internet connectivity issues, each location can take over block production from the other within minutes.

At the time of the anomaly, our pools were running on Location One.

Shortly after the start of epoch 221, all of our pools and relays were updated to cardano-node v1.20.0 (we usually wait until 1/3~2/3 of all SPOs have updated without issues), and we manually verified that the first blocks were successfully minted and added to the chain. So far so good!

Besides keeping an eye on our nodes on a daily basis, we also have a system in place to automatically monitor the health of our pools and relays, and trigger an alert if needed. The alerts were configured as follows:

- Regular block count increase: if it takes unusually long for a pool to mint the next block (currently set to 8 hours for 4ADA, 16 hours for F4ADA and W4ADA);

- External peers: whenever the number of external peers of any relay drops below 10;

- Node RAM: whenever the RAM for any pool or relay exceeds 4GB (relay), 2GB (pool), or drops to zero;

- Node Residency: whenever the live data for any relay exceeds 1.5GB.
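For fellow pool operators, the alert rules above could be sketched roughly as follows. This is only an illustration and not our actual monitoring setup: the `NodeSample` fields and the `check_alerts` function are hypothetical names, and only the thresholds are taken from the list above.

```python
from dataclasses import dataclass

GB = 1024 ** 3

# Thresholds from the list above; every name in this sketch is hypothetical.
MAX_HOURS_WITHOUT_BLOCK = {"4ADA": 8, "F4ADA": 16, "W4ADA": 16}
MIN_EXTERNAL_PEERS = 10
MAX_RAM_BYTES = {"relay": 4 * GB, "pool": 2 * GB}
MAX_RELAY_LIVE_BYTES = 1.5 * GB


@dataclass
class NodeSample:
    name: str
    role: str  # "relay" or "pool"
    ram_bytes: float
    live_bytes: float = 0.0
    external_peers: int = 0
    hours_since_last_block: float = 0.0


def check_alerts(sample: NodeSample) -> list:
    """Return the list of alert messages that apply to one node sample."""
    alerts = []
    # RAM: zero means the node process is gone, too high means trouble.
    if sample.ram_bytes == 0:
        alerts.append("RAM dropped to zero")
    elif sample.ram_bytes > MAX_RAM_BYTES[sample.role]:
        alerts.append("RAM above limit")
    if sample.role == "relay":
        if sample.external_peers < MIN_EXTERNAL_PEERS:
            alerts.append("too few external peers")
        if sample.live_bytes > MAX_RELAY_LIVE_BYTES:
            alerts.append("live data above limit")
    if sample.role == "pool":
        limit = MAX_HOURS_WITHOUT_BLOCK.get(sample.name)
        if limit is not None and sample.hours_since_last_block > limit:
            alerts.append("no new block for too long")
    return alerts


relay = NodeSample("relay1", "relay", ram_bytes=3 * GB,
                   live_bytes=1 * GB, external_peers=16)
print(check_alerts(relay))
```

With rules like these, a relay that has 16 external peers and normal memory use raises no alert at all, even if it has silently stopped doing useful work.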

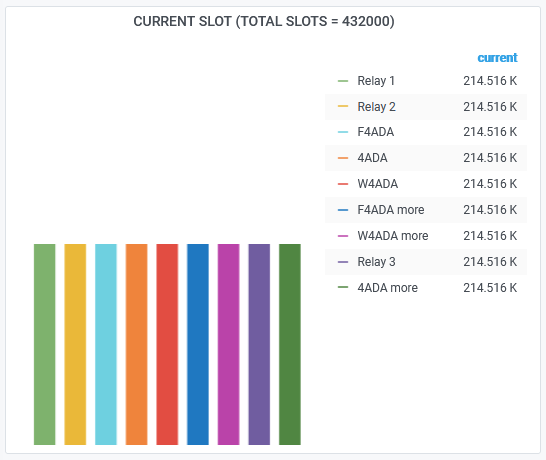

Also, we frequently verify by hand that all of our pools, relays and passive nodes are in perfect sync, for which we grab the real-time data of each individual node as shown below:

What Happened?

During the second day of epoch 221 (evening/night of Oct 3/4), minted blocks were not added to the chain. By the time we discovered the issue, by comparing our own pool block count with on-chain data from pooltool.io, we had unfortunately already lost a number of blocks. The losses are listed below, next to the number of blocks expected for the whole epoch:

| Pool | Lost blocks | Expected blocks (full epoch) | Lost (%) |
|---|---|---|---|
| 4ADA | 4 | 30 | 13% |
| F4ADA | 5 | 10.6 | 47% |
| W4ADA | 2 | 6.6 | 30% |
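The percentages are simply the lost blocks divided by the blocks expected for the full epoch. A minimal sketch of that arithmetic, using only the figures from the table (the variable and function names are ours, chosen for illustration):

```python
# Figures from the table: lost blocks and expected blocks for the full epoch.
lost = {"4ADA": 4, "F4ADA": 5, "W4ADA": 2}
expected = {"4ADA": 30, "F4ADA": 10.6, "W4ADA": 6.6}


def lost_share(pool: str) -> int:
    """Lost blocks as a whole-number percentage of the expected blocks."""
    return round(100 * lost[pool] / expected[pool])


for pool in lost:
    print(f"{pool}: {lost_share(pool)}% of expected blocks lost")
```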

We’ll have to wait for the epoch results to see whether our block assignment luck will alleviate or worsen the above negative impact on expected rewards…

What Went Wrong…

The connection count is what most likely got our relays into trouble. We are aware of the node’s vulnerability with regard to connection count. Best practice dictates a maximum of 20 peers per node, and we have always respected this limit very carefully. When the problem occurred, relay 1 had 16 external connections plus 3 pools, which makes 19. Relay 2 had 13 + 3 = 16 connections. Until now, these numbers had never been a problem. Whether the latest cardano-node software (1.20.0) is more fragile in this regard remains to be seen, and we will raise the issue with IOG.

We have now further reduced the number of connections to 13 (10 external plus 3 pools).
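For operators who want to check their own numbers, the connection budget works out as below. This is a small sketch under our own assumptions: the limit of 20 is the best-practice figure quoted above, not a value enforced by the node, and the function names are hypothetical.

```python
MAX_PEERS_PER_NODE = 20  # best-practice limit quoted above, not a hard node limit
POOLS_PER_RELAY = 3      # each relay also serves our three pools


def total_connections(external_peers: int) -> int:
    """External peers plus the pools connected to the relay."""
    return external_peers + POOLS_PER_RELAY


def headroom(external_peers: int) -> int:
    """How many connections remain before reaching the best-practice limit."""
    return MAX_PEERS_PER_NODE - total_connections(external_peers)


for label, external in [("relay 1 (incident)", 16),
                        ("relay 2 (incident)", 13),
                        ("both relays (now)", 10)]:
    print(label, total_connections(external), "connections,",
          headroom(external), "below the limit")
```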

…And Will Not Happen Again

As explained above, we had been monitoring quite a few variables, none of which hinted that something was going wrong. This can also be seen in the screenshot of our node monitoring at the bottom of this post.

So, it seemed that all of our nodes were chugging along nicely: blocks were getting minted, all nodes were in perfect sync, relays were showing healthy connections to the outside world, and no apparent erratic behaviour was resulting in unusual memory consumption or CPU load (not shown in the figure below).

What we unfortunately did not notice, but what would have given away that something was not in order, was the stagnation in processed transactions for both(!) relays and, as a result, for our pools as well. In hindsight, this fairly straightforward parameter (see bottom of chart below) probably should have been on our radar, and from now on it certainly will be!
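A check for this kind of stagnation can be quite simple. The sketch below assumes that the monitoring system periodically samples a cumulative processed-transactions counter per node. The function name, the sampling approach and the window size are our own illustration, not a feature of cardano-node or of any particular monitoring tool.

```python
def is_stalled(samples: list, window: int) -> bool:
    """Return True if the counter did not increase over the last `window` intervals.

    `samples` holds cumulative processed-transaction counts in chronological
    order. A counter reset (for example after a node restart) followed by new
    growth is not reported as a stall.
    """
    if len(samples) < window + 1:
        return False  # not enough history to judge yet
    recent = samples[-(window + 1):]
    return all(later <= earlier for earlier, later in zip(recent, recent[1:]))


# Hypothetical samples: the relay stops processing transactions after 30.
relay_tx_counts = [10, 20, 30, 30, 30, 30]
print(is_stalled(relay_tx_counts, window=3))
```

An alert that fires when this holds for a relay, and especially for both relays at once, would have caught the problem even though block counts, peers and memory all looked healthy.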

Take-away For You as a Delegator

We take our stake pool service very seriously and have put in place substantial investments and precautions to deliver top-notch performance and rewards to our delegators. Nevertheless, a nasty, hidden and simultaneous hiccup in two relays managed to fool our monitoring and alert system, prompting us to make improvements so that it does not happen again.

I hope that this detailed explanation of how our nodes are configured and what systems we have put in place to monitor their performance can benefit other pool operators.

For you as a delegator, I hope our transparency helps to preserve or restore (if you are reading this after seeing your latest rewards!) your trust in our pools.

Sincerely,

Jos

P.S. Do you ever read about other pools losing some of their blocks? I’d be curious to know about any examples you may have. Our ROS so far has been well above average, so some pools may not have had any issues yet, but the vast majority with lower ROS… who knows?

Hi, what a pity, but not a disaster. I appreciate your explanation, although too technical for me as a dummy 😀, and your openness and remedial action. On to the next blocks!🤛🏻

Many thanks Bas, it will affect this epoch only, no impact on future rewards!

Thanks Jos. I appreciate your explanation. My experience is that you’ve been transparent on a number of issues since the beginning of the test net. Thank you for your hard work. Looking through the windshield, not the rear view mirror!

Thanks. I think these sorts of errors probably happened, or will happen, to most other pools at some point as well. Truth is we probably don’t hear about them most of the time. But statistics don’t lie–it will take time, but great pools will always be able to show what they’re made of at some point 🙂

Congratulations Jos, despite the issue, your transparency and the way you keep the stakeholders informed is a guarantee. Thanks for your hard work!

Many thanks Dave, onwards & upwards! 💪

We appreciate your honest explanation. The issue was handled professionally. Your thorough response to the problem has boosted my confidence in you, and 4ADA remains my stake pool of choice. Best wishes, Matt.

My ada has been and is currently staked in whales4ada. I don’t know why it keeps saying unknown pool in Yoroi. Should I switch? Not sure what to do.

Hi Rg,

I just checked and W4ADA showed up in my Yoroi just fine! It is also showing in Daedalus, on Pooltool and on Adapools.org, where Yoroi gets its data from: https://adapools.org/pool/bbfb301cad22e7a62f6fea6e3c93aec4e6f714aef66b0a828e792f12

Not sure how to solve your issue. Perhaps you can try to see if it shows up on a different device?

P.S. W4ADA’s variable fee has been reduced to 0%! 🙂